Any webmaster worth his salt will sit up and take notice when Google issues a warning via Google Search Console (formerly Google Webmaster Tools). So it’s no surprise that when Google did just that last week, some webmasters went into a tailspin. The alert warned webmasters who were blocking the CSS and JavaScript on their websites via robots.txt that this issue could hurt their site’s ranking. Luckily, we are happy to report that the problem can easily be resolved with a little know-how. So if the e-mail below pops up in your inbox, don’t sweat it! We can help you keep your cool, even in this sweltering summer heat.

Warning from Google About Hiding CSS and JavaScript Files via Robots.txt

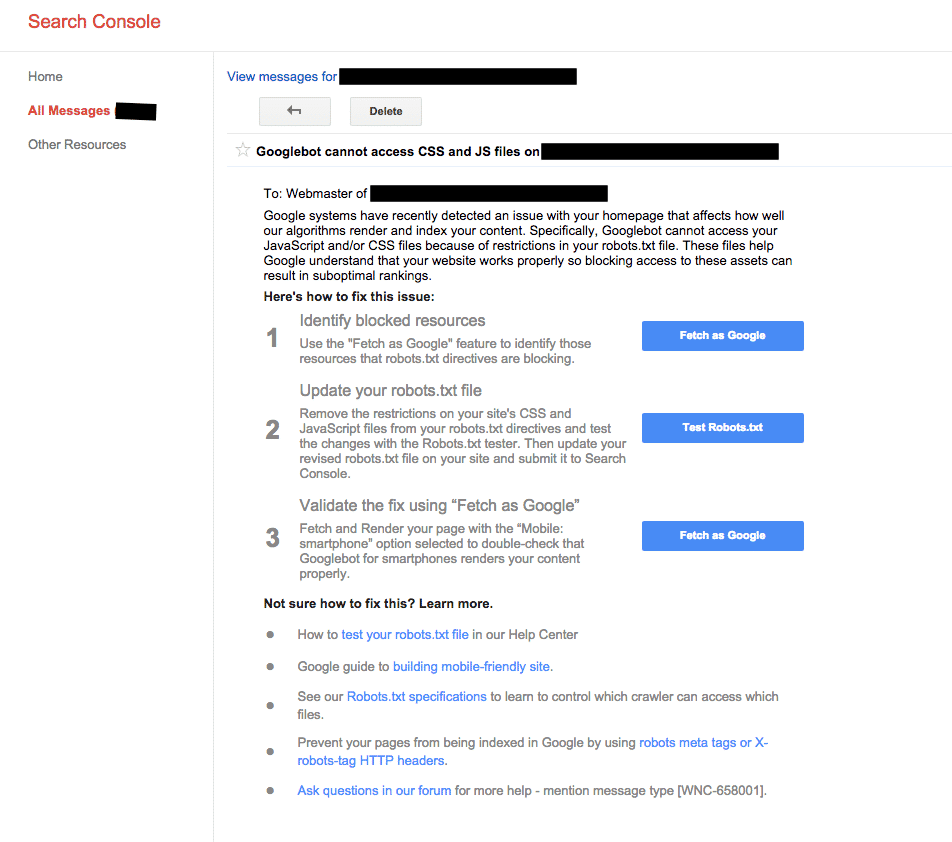

THE MESSAGE

If you aren’t able to read the text in the picture, here is the full warning:

“Google systems have recently detected an issue with your homepage that affects how well our algorithms render and index your content. Specifically, Googlebot cannot access your JavaScript and/or CSS files because of restrictions in your robots.txt file. These files help Google understand that your website works properly so blocking access to these assets can result in suboptimal rankings.”

This message states a fact that Google has made clear many times now (through Matt Cutts in 2012, through an update to the Webmaster Guidelines in October 2014, and through the Fetch & Render tool’s recommendations). Basically, Google does not want webmasters to block its search bot’s ability to see a site’s CSS and JavaScript (JS). Google wants to be able to fully render a website and to see it in the same way that a human being does. If your CSS and JS are blocked, your mistake “can result in suboptimal rankings.” This isn’t a serious SEO issue, but the notification might alarm people who aren’t veteran SEOs.

THE ISSUE

Google can read your entire website these days, and it doesn’t react well when you decide to block information. To be sure that your site receives the ranking it deserves, be open with your site’s content and let the spiders see what all of your site’s viewers see.

In the past, webmasters using WordPress were sometimes advised to prevent Google’s spiders from accessing their wp-includes directory and plugins directory. However, this is no longer necessary. As a matter of fact, it could actually harm your website by blocking the CSS and JS that Googlebot needs to render your site correctly.

In addition, the old practice of blocking your /wp-admin/ folder is a bad idea, because if you block it but still link to it a few times, people can find it using the query “inurl:wp-admin.” However, if you leave it unblocked, WordPress will send an X-Robots-Tag HTTP header on admin pages, which will prohibit Google from showing the pages in search results.

THE SOLUTION

Tackling this issue may seem daunting at first, but it’s actually incredibly simple. All you need to do is edit your site’s robots.txt, removing any commands that block CSS and JS from Googlebot. If you see any of the following lines of code within your website’s robots.txt file, remove them:

- Disallow: /.js$*

- Disallow: /.inc$*

- Disallow: /.css$*

- Disallow: /.php$*

- Disallow: /wp-admin/ (for reasons noted above)

By removing these blockages, you’ll be providing Googlebot with the files it needs to fully render your site. After that, use the Fetch & Render tool to confirm that the bot is able to see the site in the same way that a human can see it. If it is still being blocked, the tool will let you know what further changes need to be made.
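
If your robots.txt is long, a short script can help you spot the offending lines before you edit. The Python sketch below is only an illustration, not an official Google tool: the function name `find_blocking_rules` and the pattern list are our own assumptions, built from the rules and WordPress directories mentioned above, and you may need to adjust them for your site. It flags lines for you to review and does not replace a check with Fetch & Render.

```python
import re

# Assumed patterns: the file extensions and WordPress paths discussed in this post.
# Adjust this list to match your own site's structure.
SUSPECT = re.compile(
    r"\.(js|css|inc|php)\b|/wp-admin/|/wp-includes/|/plugins/",
    re.IGNORECASE,
)

def find_blocking_rules(robots_txt):
    """Return (line_number, rule) pairs for Disallow rules that may block CSS or JS."""
    flagged = []
    for number, raw in enumerate(robots_txt.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # ignore comments and surrounding spaces
        if not line.lower().startswith("disallow:"):
            continue  # only Disallow rules can block Googlebot
        path = line.split(":", 1)[1].strip()
        if SUSPECT.search(path):
            flagged.append((number, line))
    return flagged

if __name__ == "__main__":
    # Hypothetical robots.txt content, used only for demonstration.
    sample = "User-agent: *\nDisallow: /.js$*\nDisallow: /private/\nDisallow: /wp-admin/\n"
    for number, rule in find_blocking_rules(sample):
        print(f"line {number}: {rule}")
```

To use it on a real file, read your robots.txt into a string (for example with `open("robots.txt").read()`) and pass it to the function. Every line it reports is a candidate for removal.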

WNC-658001 (that’s the warning’s official and somewhat intimidating name, by the way) might have sent you into a tizzy at first, but we hope that we’ve quelled your fears. Following the simple instructions above, you can ensure that your website welcomes Googlebot with open arms.

Do you need help optimizing your site for Google’s spiders? You may want to contact 417 Marketing, an online marketing company based in Springfield, Missouri. We specialize in SEO (search engine optimization) and SEO-friendly web design. Click here to contact us.